Conversational Tutors for XR Training: LUMINOUS Advances at IWSDS 2025

Bilbao, May 2025 – The LUMINOUS Horizon project continues to push the boundaries of human–AI interaction in Extended Reality (XR). At the 15th International Workshop on Spoken Dialogue Systems (IWSDS 2025), consortium researchers presented a study titled “Conversational Tutoring in VR Training: The Role of Game Context and State Variables.” The work is a step toward the project’s vision of language-augmented XR systems powered by situation-aware, generalizing AI.

What the Study Explores

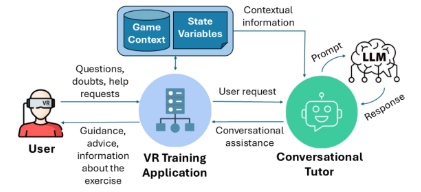

The paper investigates how Large Language Models (LLMs) can serve as conversational tutors within immersive VR training environments—specifically in health and safety training scenarios.

The research explores how the accuracy of the tutor’s responses improves when the conversational tutor is given access to:

- Game context – such as current goals or hazards in the VR environment.

- State variables – like the learner’s current progress or recent actions.

The study compares zero-shot and few-shot prompting techniques for injecting this contextual information into the tutor’s prompt, enabling the LLM to generate more precise, helpful guidance.
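As a rough illustration of the idea, the sketch below assembles a tutor prompt with and without game context and state variables, and with optional few-shot examples. It is not the authors' implementation: the `TutorContext` class, the `build_prompt` function, the prompt wording, and all sample values are hypothetical and chosen only to show the difference between the prompting variants.

```python
from dataclasses import dataclass, field


@dataclass
class TutorContext:
    """Hypothetical container for what the tutor is told about the session."""
    goals: list                                 # game context: current goals
    hazards: list                               # game context: hazards in the scene
    state: dict = field(default_factory=dict)   # state variables: progress, recent actions


def build_prompt(question, context=None, examples=()):
    """Assemble a plain-text prompt for an LLM tutor (illustrative wording only).

    context=None gives a baseline prompt without situational information.
    An empty `examples` gives a zero-shot prompt; passing (question, answer)
    pairs gives a few-shot prompt.
    """
    parts = ["You are a tutor guiding a learner through a VR health and safety training."]
    if context is not None:
        parts.append("Game context:")
        parts.append("- Current goals: " + "; ".join(context.goals))
        parts.append("- Hazards: " + "; ".join(context.hazards))
        parts.append("State variables:")
        for name, value in context.state.items():
            parts.append(f"- {name}: {value}")
    # Few-shot examples are shown as earlier learner/tutor turns.
    for example_question, example_answer in examples:
        parts.append(f"Learner: {example_question}")
        parts.append(f"Tutor: {example_answer}")
    parts.append(f"Learner: {question}")
    parts.append("Tutor:")
    return "\n".join(parts)


# Made-up sample session, for illustration only.
ctx = TutorContext(
    goals=["Isolate the power supply"],
    hazards=["Exposed cable near the workbench"],
    state={"steps_completed": "2 of 5", "last_action": "picked up gloves"},
)

baseline = build_prompt("What should I do next?")
zero_shot = build_prompt("What should I do next?", ctx)
few_shot = build_prompt(
    "What should I do next?",
    ctx,
    examples=[("Where are the gloves?", "They are on the shelf to your left.")],
)
```

Each resulting string would then be sent to whichever LLM serves as the tutor. Keeping context, state, and examples as separate arguments makes it straightforward to compare the variants on the same learner question.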

Key Findings

- Performance boost through context: Adding game context and learner state data significantly improves LLM response accuracy (up to +0.26 on a 0–1 scale).

- Human evaluation confirmed: Raters consistently preferred the context-aware responses, judging them clearer and more relevant.

- No fine-tuning required: The improvements came from prompting alone, which points to scalable, low-overhead deployment.

Strategic Fit with LUMINOUS Goals

This work directly supports LUMINOUS Horizon’s vision of language-augmented XR systems that adapt to unseen environments and evolving user needs through natural interaction.

Key contributions aligned with project goals:

- Context-driven reasoning: The LLM uses dynamic game and user state data to adapt responses in real time.

- No hardcoded behavior: Guidance emerges from the model’s reasoning, not from scripted dialogue paths.

- Generalisation to novel situations: The system supports user interaction in scenarios not predefined during development.

- Natural task completion: Users can accomplish unfamiliar tasks through intuitive, context-aware communication.

The study helps lay the foundation for adaptive, multimodal XR platforms that respond intelligently to the complexity and unpredictability of real-world environments.

Next Steps in the LUMINOUS Journey

The study sets a clear trajectory for the project’s next phase:

- Embed LLM tutors in XR prototypes developed under LUMINOUS

- Refine prompting pipelines to optimize tutor behavior across diverse use cases

- Enhance multimodal communication, combining speech, visual aids, and avatars

- Evaluate across sectors, including health, education, and industrial training

Conclusion

The IWSDS 2025 paper showcases how natural language and situational intelligence can converge to make XR experiences truly interactive, intelligent, and user-centered. As LUMINOUS works to create a future where XR systems are adaptable, personalized, and communicative, this work provides a strong foundation for language-powered, cognitively inspired training environments.

We congratulate the authors for advancing the frontier of human–AI collaboration in immersive learning.

Read the paper: “Conversational Tutoring in VR Training: The Role of Game Context and State Variables,” IWSDS 2025.

Learn more about the project: https://luminous-horizon.eu

LUMINOUS — Building the next generation of Language-Augmented XR through cognitive AI, immersive environments, and human-centric design.